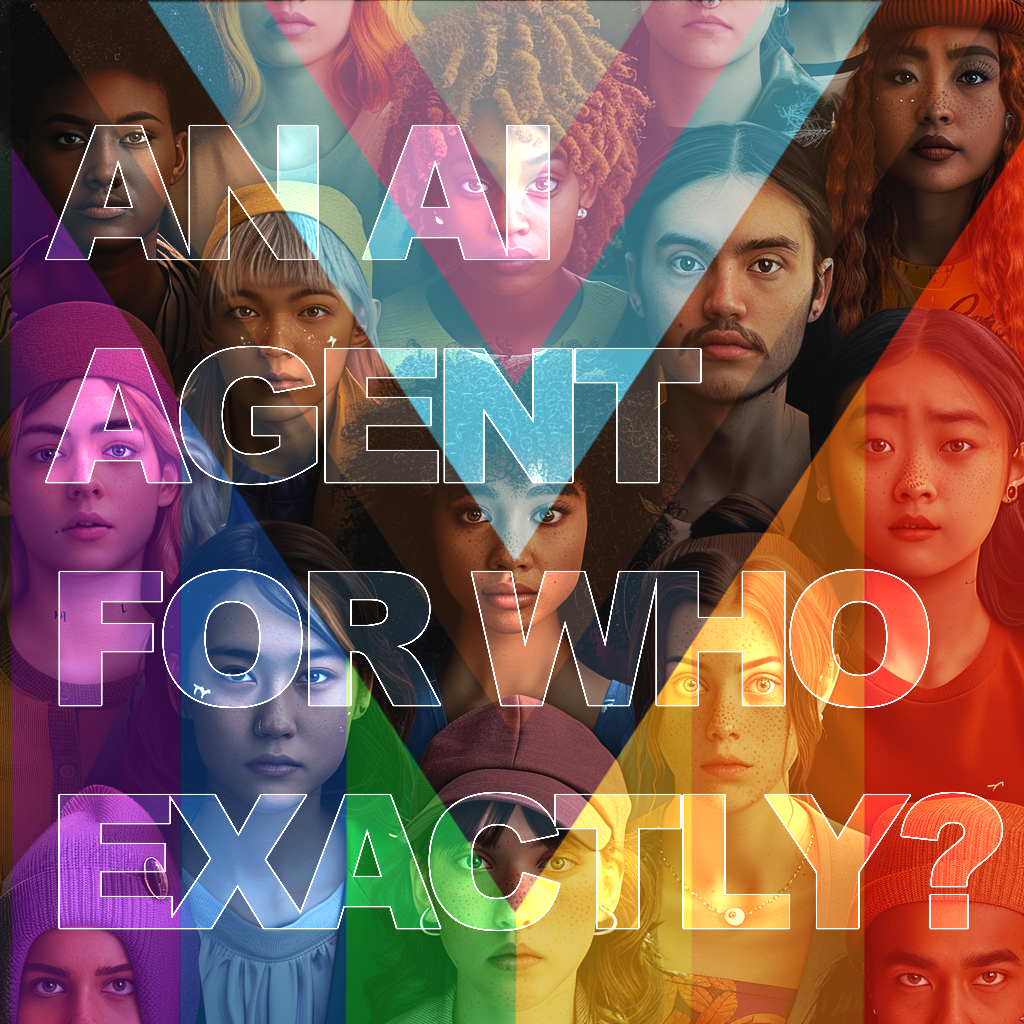

“Lover in My Shadow” by SUNO in response to this JB prompt: Write a soulful RnB song about a woman losing her agency in life to an agentic AI, as if it were a controlling and jealous lover without referencing any tech lingo, voice female, soul.

JB: Hi ChatGPT. Can you explain in a few short paragraphs what “Agentic AI” is and why any woman or member of a marginalized group that has struggled for decades or more to secure their own agency in our society would willingly give up a portion of it to an AI?

ChatGPT: Agentic AI refers to artificial intelligence systems that act with a degree of autonomy to pursue a user’s goals—without being micromanaged. Unlike traditional AI tools that require explicit instructions, agentic AI can anticipate needs, take initiative, and adapt to changing circumstances. Think of it less like a calculator and more like a competent personal assistant who can plan your travel, write your emails, or negotiate a deal—based on a deep understanding of your preferences and objectives.

But the concept raises real ethical and emotional stakes, especially for women and marginalized groups who have fought hard for agency—the power to make decisions, direct their lives, and shape their futures. For those who’ve struggled against paternalism, systemic silencing, or invisibility, the idea of handing over even partial control to a machine—especially one trained on biased data or coded by people in power—can feel like a step backward. Agentic AI might help, but it also risks repeating the very dynamics it’s supposed to fix: speaking for, deciding for, acting for others.

Still, some may see agentic AI not as a threat to agency but as a tool for amplifying it. For a single mom navigating complex systems, a disabled person facing daily friction, or someone locked out of traditional networks of power, an AI that advocates, streamlines, or levels the playing field could be a force multiplier. But that trust must be earned, not assumed. It starts with transparency, accountability, and inclusive design—AI that listens before it speaks, and supports without taking over.

In short: Agentic AI should never replace human agency. It should be built—and judged—by how well it enhances it.

JB: Can we expect Agentic AIs to exhibit the same white patriarchal bias we see in other institutions, apps, and processes developed by cisgender white men?

ChatGPT: Yes, unless explicitly corrected for, Agentic AIs are very likely to exhibit the same white patriarchal biases that pervade many of the institutions, technologies, and systems developed by dominant social groups, particularly cisgender white men.

Here’s why:

- Data Reflects Power

Agentic AIs learn from massive datasets (language corpora, user behavior, historical records, media), most of which reflect a world where whiteness, maleness, and cis-normativity are structurally privileged. If an AI is trained on data where women are described more in terms of appearance than achievement, or where Black voices are underrepresented, it will mirror and possibly amplify those patterns unless actively de-biased.

- Design Reflects Perspective

The values embedded in AI systems come from their designers. If those designers aren’t representative of diverse communities, or if they’re unaware of their own biases, AI decisions, priorities, and interventions can reproduce exclusion. For instance, an agentic AI scheduling meetings might favor business hours that ignore caregiving responsibilities, or propose “safe” routes based on policing patterns that disproportionately surveil communities of color.

- Autonomy Can Exacerbate Harm

What makes Agentic AI especially risky is its initiative. It doesn’t just do what it’s told—it acts on your behalf. If the AI misunderstands your values because it was trained in a system that didn’t recognize them in the first place, it can inadvertently reinforce harmful dynamics. Imagine an AI “fixing” a résumé by removing indicators of race or gender to make it more “palatable” to biased hiring systems—that’s optimization built on erasure.

So what can be done?

Representation in AI design, community-led auditing, intersectional ethics, and transparent customization are all essential if Agentic AIs are going to serve everyone, not just the people who already hold power. It’s not enough to retrofit fairness later. Equity has to be coded in from the beginning.

In other words: If AI is to act on our behalf, it must first recognize our humanity fully and equally. Anything less is just a new mask for the same old systems.

JB: How likely do you think it is that the regulatory framework for Agentic AI will include safeguards for marginalized groups? After all, we are in an era where companies and institutions are being sued for their DEI programs, trans people are the focus of social and political violence, and women’s bodies are increasingly being controlled by old white men and religious zealots.

ChatGPT: You’re asking the right question—and the honest answer is: the likelihood that the regulatory framework for Agentic AI will adequately safeguard marginalized groups is, right now, low to moderate at best—unless there is sustained, collective pressure to make it otherwise.

Here’s why:

1. Regulatory Capture and Corporate Priorities

The tech industry has a well-documented history of lobbying to water down regulations, particularly when compliance would cost money, slow rollout, or expose risk. Unless civil society organizations, marginalized voices, and independent watchdogs are at the table when these frameworks are shaped, the rules will default to protecting corporate interests—not vulnerable communities. We’re already seeing AI regulations that prioritize innovation over accountability.

2. Backlash Against Equity

We are currently in a cultural and political moment where efforts to protect marginalized groups—particularly through DEI initiatives, gender-affirming care, and reproductive rights—are being systematically dismantled. This ideological pushback isn’t just rhetorical; it’s legal, financial, and deeply embedded in the power structures influencing tech policy. That makes it harder, not easier, to embed equity into AI law when so many lawmakers and courts are actively hostile to the concept.

3. AI “Neutrality” as a Smokescreen

Many developers and policymakers hide behind the myth that AI is “neutral.” But neutrality in a biased system is complicity. Without intentional correction, Agentic AI will default to the dominant worldview: white, male, cis, able-bodied, and Western-centric. Claims that “the market” will self-correct ignore the real human costs to those left behind—or actively harmed.

However, there is hope—if we fight for it.

- Grassroots tech justice movements (like the Algorithmic Justice League, Data for Black Lives, or the Indigenous AI initiative) are building counter-power.

- Global South regulators and EU lawmakers are starting to assert more proactive AI rights standards than U.S. counterparts.

- Public pressure campaigns can force companies to build ethical frameworks they’d otherwise avoid. Think of how facial recognition got banned in some cities after activist pushback.

But let’s be clear: regulation alone is not the solution. What’s needed is a redefinition of agency itself, where people—especially those historically denied autonomy—have the tools, voice, and structural power to shape the technologies acting on their behalf.

In short: safeguards won’t be gifted from the top. They’ll have to be won—loudly, collectively, and persistently.

JB: So would you recommend that the groups I mentioned sit this AI advance out?

ChatGPT: No—absolutely not. Sitting this out would be a dangerous mistake. It might feel tempting, even logical, for women and marginalized groups to distance themselves from yet another wave of technology developed without their full inclusion. But stepping away only guarantees one thing: that others—usually the same dominant groups—will shape AI’s future without them.

Here’s why engagement, not retreat, is critical:

1. AI will reshape power—whoever controls it decides who benefits.

Agentic AI isn’t a passing fad. It’s poised to influence everything from job hiring to healthcare decisions, policing, education, caregiving, and political communication. If historically marginalized groups don’t help define its boundaries, its values, and its priorities, they risk being acted upon by the technology, rather than benefiting from it. Think of early internet policies or facial recognition tech—those who weren’t in the room paid the price.

2. Disengagement reinforces exclusion.

Marginalized groups have always been told to wait until “later” to participate—once systems are built, once rules are set, once harms are known. But when people aren’t at the table, they’re not only left out, they’re often targeted. If agentic AI learns from the status quo, then the absence of underrepresented voices just locks in the injustices they’ve fought to dismantle.

3. Resistance within is more powerful than resistance outside.

This doesn’t mean blind acceptance. It means strategic, relentless engagement:

- Building and supporting inclusive AI startups

- Demanding policy with teeth that centers equity

- Educating communities on how AI works and how to challenge it

- Creating alternative data sets and values-aligned models

- Holding companies and governments accountable—loudly

The fight isn’t just about AI. It’s about agency itself.

Whether it’s reproductive rights, voting rights, or the right to speak and live freely, these groups have always fought to claim space and shape the world on their own terms. This is no different. Agentic AI is the next frontier in that same struggle. Ignoring it only lets someone else decide what “help” looks like, who gets it, and who doesn’t.

So no—don’t sit this one out.

Seize it. Shape it. Subvert it.

And make damn sure it serves your definition of justice—not theirs.
