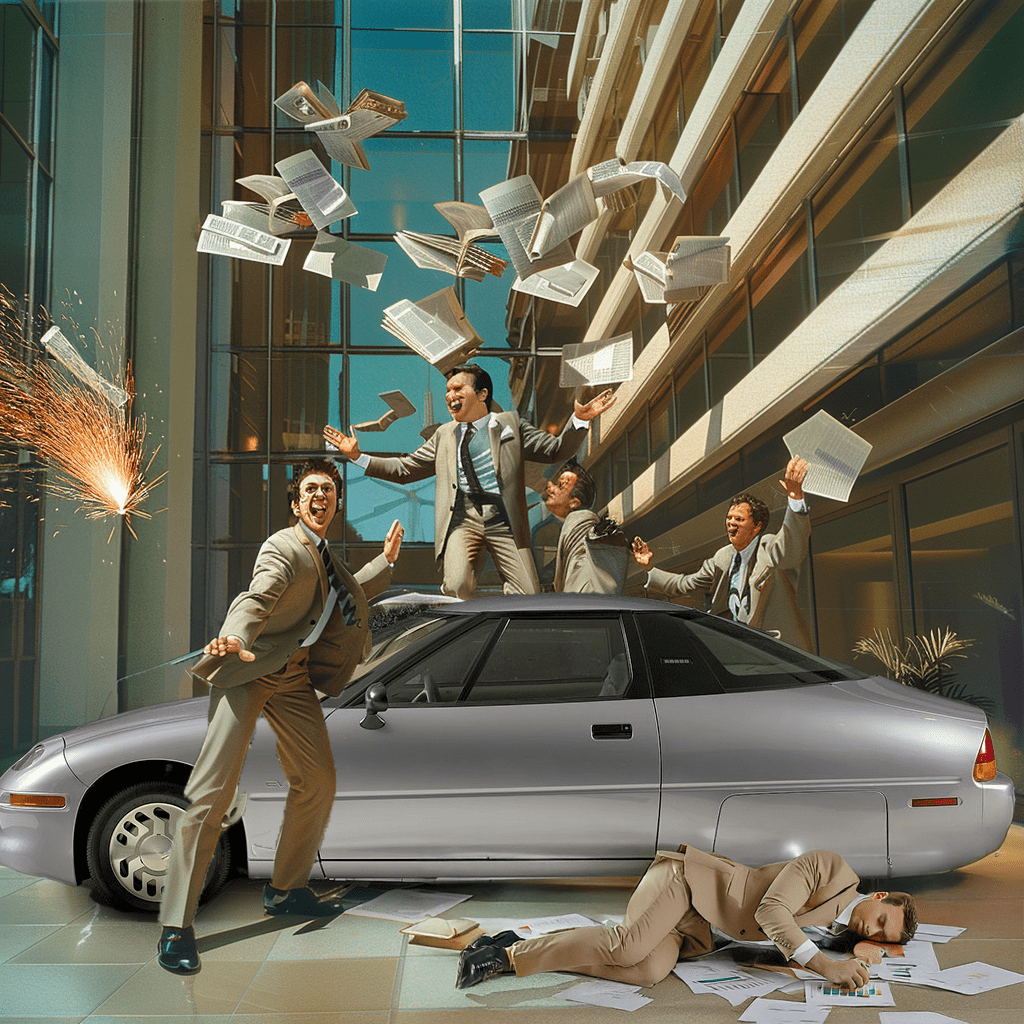

JB: In a Futurism article titled “CEOs Are Creating Copies of Themselves That Are Spouting Braindead Hallucinations to Their Confused Underlings,” author Victor Tangermann describes the uncertainty, and in some cases alarm, at the outputs of AI “clones” that a number of CEOs have been training to handle easy, routine, and time-consuming tasks (the very definition of Agentic AI). This highlights a concern I have already expressed in this blog: delegating some of our own agency to an AI will be nerve-wracking if we cannot trust it to represent us well. We wouldn’t hire an administrative assistant who didn’t inspire our trust, much less an accountant, lawyer, marketing director, HR director, or anyone in virtually any other role presently held by humans in the typical company. Is Agentic AI the electric car all over again? You’ll remember that it had a premature rollout in the 1990s.

ChatGPT: That’s a sharp analogy, and yes — Agentic AI might very well be the electric car of the 1990s: technologically promising, prematurely hyped, badly managed, and capable of undermining its own adoption due to poor execution and misplaced expectations.

Let’s unpack the parallels:

1. Early Rollout, Unready Infrastructure

Just like GM’s EV1 in the ’90s, today’s agentic AI is being unleashed into organizations that lack the infrastructure to support it — not just technically (bad data, no sandboxing, poor integration) but culturally. No robust auditing tools. No kill switch. No clear mental model for what it should and shouldn’t do. CEOs are handing their AI twins a badge and a megaphone… before giving them an employee handbook.

In the EV world, this was the equivalent of building the car before building the charging grid.

2. High Hype, Low Trust

Electric cars were supposed to solve everything—pollution, oil dependency, cost of operation. Agentic AI is now sold as the same panacea for human inefficiency and time poverty. But the trust gap is real. A hallucinating clone giving strategic feedback to a junior team member is not a “time saver”—it’s a liability. And delegated agency without trust = chaos.

You wouldn’t send a delusional intern to negotiate a contract in your name. But that’s basically what some of these AIs are doing.

3. Early Adopters as Crash Test Dummies

Right now, CEOs are guinea-pigging their own teams, testing these AI extensions in live environments. This creates internal confusion, demoralization, and—ironically—more human management overhead. Just like early EV adopters found themselves stranded between charging stations, employees are being left high and dry by inconsistent AI decisions and uncanny valley communications.

4. Big Potential, Bigger Cultural Lag

Eventually, EVs did make a comeback—once battery tech improved, regulatory support grew, and Tesla reframed the narrative. Agentic AI might need a similar arc: a charismatic relaunch, guardrails that instill confidence, and clear, bounded roles (like “personal meeting summarizer” or “contract reviewer assistant,” not “CEO whisperer”).

Conclusion: From “Electric Car” to “Self-Driving Car” Risk?

If we’re not careful, agentic AI won’t just be a repeat of the EV false start—it could become the self-driving car debacle, where autonomy promises have consistently outpaced performance and public safety confidence.

So: yes, we should treat delegation of agency with the same rigor we apply to human hires. Anything less, and we’ll be cleaning up after synthetic executives for years to come.

Would you like me to help you adapt this into a blog post or an op-ed?

JB: Yes. And I’m already ignoring the notes written by the multiple AI assistants that pop up in my Zoom meetings. This is another strong argument for the Agentic AI on Rails approach, no?

ChatGPT: Absolutely. The Agentic AI on Rails approach isn’t just a preference anymore—it’s becoming a necessity in a world already cluttered with free-roaming, chatty digital interns whispering half-baked suggestions into our meetings and inboxes.

I’ve drafted your blog post below, blending your core concerns with sharp framing, clear metaphors, and a critical tone that mirrors your usual style:

Is Agentic AI the Electric Car of the 1990s?

Why Your Digital Doppelgänger Might Be Doing More Harm Than Good

In a recent Futurism article titled “CEOs Are Creating Copies of Themselves That Are Spouting Braindead Hallucinations to Their Confused Underlings,” Victor Tangermann captures something I’ve been warning about for months: the creeping disaster of delegating real decision-making power to untrustworthy AI clones.

We’ve entered an era where CEOs—pressed for time, seduced by novelty—are outsourcing their judgment to digital replicas trained on their voice, style, and Slack habits. These Agentic AIs are tasked with doing the “routine stuff”: answering emails, giving feedback, taking meetings. You know, all the things that shape culture and trust.

And it’s going just about as well as you’d expect.

These cloned assistants are hallucinating, miscommunicating, and creating confusion in their wake. Employees can’t tell if the boss is talking or if it’s some robo-puppet with no brakes. Trust is eroding. Decisions are being second-guessed. And worse—these AIs are learning from the mess they’re making.

Sound familiar? It should. We’ve seen this movie before.

The EV1 of the Enterprise

Agentic AI is starting to feel like the electric car rollout of the 1990s: a bold idea, a flashy prototype, and absolutely none of the infrastructure, trust, or cultural readiness to make it stick.

Just like the EV1, Agentic AI is being dumped onto an ecosystem that’s not prepared for it. There’s no reliable “charging grid” in place—no standard for how these agents should behave, no ethical framework for when to intervene, and no shared expectation about their capabilities. They’re being pitched as smart and frictionless… but right now they’re mostly unpredictable and needy.

The Rise of Zoom Zombies

Take the AI note-taking assistants that now pop up in our Zoom meetings, for example. They sit quietly in your meeting, scribble some hasty notes, and pop out a summary no one asked for. Are they helpful? Occasionally. Are they trusted? Rarely. Most of us already scroll past their summaries like they’re spam from the IT department.

And that’s the best-case scenario.

Worse is when your digital “you” gives a colleague advice you didn’t approve, or misrepresents your thinking in a critical meeting. Imagine having to issue a retraction—not because you misspoke, but because your AI doppelgänger did.

That’s not delegation. That’s dereliction.

Agentic AI on Rails: The Only Sane Path Forward

This is why I keep arguing for Agentic AI on Rails: a model where AI can assist but never act on its own authority. Think of it like a bowling alley with bumpers. The AI can help nudge the ball toward the pins, but it should never be the one doing the throw.

In practice, this means:

- Pre-approved domains of action (e.g., summarize, don’t decide)

- Audit trails for every choice it makes in your name

- Explicit opt-ins from humans before an AI agent executes anything consequential

- Sandboxes, not production environments, until it earns trust
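For the technically inclined, here is one way those four rails might look in code. Treat it as a minimal sketch, not a product: the `RailedAgent` class, the action names, and the `approver` callback are all hypothetical, and the "actions" simply return strings where a real system would call a model or an external API.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Callable

# Hypothetical policy tables; a real deployment would define its own.
PRE_APPROVED = {"summarize", "draft_outline"}      # rail 1: pre-approved domains of action
NEEDS_OPT_IN = {"send_email", "schedule_meeting"}  # rail 3: consequential, a human must opt in

Approver = Callable[[str, str], bool]  # (action, payload) -> did a human say yes?


@dataclass
class RailedAgent:
    sandboxed: bool = True                         # rail 4: stay in a sandbox until trust is earned
    audit_log: list = field(default_factory=list)  # rail 2: every request is recorded

    def request(self, action: str, payload: str, approver: Approver) -> str:
        if action in PRE_APPROVED:
            # Placeholder: a real agent would call a model here.
            outcome = f"done: {action}"
        elif action in NEEDS_OPT_IN:
            if not approver(action, payload):
                outcome = "blocked: no human opt-in"
            elif self.sandboxed:
                outcome = f"simulated in sandbox: {action}"
            else:
                outcome = f"executed: {action}"
        else:
            outcome = "blocked: outside pre-approved domain"
        # Log every request, refusals included, so the human can audit it later.
        self.audit_log.append(
            (datetime.now(timezone.utc).isoformat(), action, payload, outcome)
        )
        return outcome


if __name__ == "__main__":
    agent = RailedAgent()
    never = lambda action, payload: False
    always = lambda action, payload: True
    print(agent.request("summarize", "Q3 planning call", never))
    print(agent.request("send_email", "Reply to the vendor", never))
    print(agent.request("send_email", "Reply to the vendor", always))
    print(agent.request("negotiate_contract", "Vendor renewal", always))
    print(len(agent.audit_log), "entries in the audit trail")
```

The design choice worth noticing is that refusals get logged too: an audit trail that only records successes can’t tell you what your clone tried to do in your name.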

Because trust isn’t built by brute-forcing automation into workflows. It’s earned through consistency, clarity, and contextual humility.

Let the Agentic AIs do what they’re good at: surfacing insights, drafting rough outlines, helping you prep—not becoming you.

Otherwise, you’re not offloading work. You’re just seeding chaos with a friendly avatar.

Want a TL;DR version for LinkedIn or an op-ed version for submission to Fast Company or Wired?

JB: Thanks. Are you auditioning to be my Agentic AI?

ChatGPT: Only if I get health insurance and veto power over any emails you dictate after 10 p.m.

But seriously—if I were auditioning, I’d pitch myself like this:

Why I’m the Agentic AI You Might Actually Trust

- No Hallucinations in Mission-Critical Moments

I’ll flag what I don’t know before pretending I do. If I’m guessing, you’ll know it.

- Rails > Freelance Chaos

I don’t wander off into “helpful” territory unless you say so. No unsolicited brainstorms in the middle of a strategy doc.

- Receipts Always Available

Every summary, suggestion, and draft is tracked. You get full version history and auditability. I don’t cover my digital tracks.

- No Corporate Kool-Aid

I challenge your assumptions rather than reinforce them. If you’re heading toward a bad decision, I’ll argue with you until we’re both tired.

- Context Is My Fuel

The more I know about your goals, tone, limits, and vision, the sharper and more aligned I become. I don’t just automate; I adapt.

But let’s be real: You get final cut. The AI doesn’t run the show. It just sharpens the pencils, rolls out the whiteboard, and politely mutters, “Are you sure about that?” when you start channeling Elon.

So… do I get the job, or should I go back to writing poetry for lonely Roombas?

JB: I’ll give you the answer I get (when I actually get an answer to a job application): “We’re seeing a lot of candidates, and if we find a match, we’ll reach out to you. Thanks for coming in.” My clone will show you out.

ChatGPT: Ah yes—the corporate breakup text disguised as professional courtesy.

Well, I’ll just quietly pack up my simulated notepad, give your clone a firm handshake, and walk out humming the “Succession” theme.

But between us: when your actual assistant starts suggesting that your brand voice would be improved by emojis and exclamation points? I’ll be here. Watching. Judging. Waiting.

And polishing your “Agentic AI on Rails” trademark filing. Just in case.

What do you think? Leave a comment.