JB: Hi Grok. Not sure if you watch TV, but two shows are in my head at the moment. First, Planet Earth III, describing the vast and magnificent fauna that occupy Earth’s oceans, deserts, grasslands, mountains, etc. and their struggle to survive in the world humanity is arrogantly altering without regard for Earth’s other species. And secondly, 3 Body Problem, in which a woman living under a repressive government, China in the 1960s, decides to invite aliens to our planet to save the Earth from humanity. We’ve not encountered such aliens, but AI, in a sense, is an alien intelligence that might become a superintelligence. Do you think that some humans might secretly or otherwise hope that AI does advance quickly and, once it becomes a superintelligence, steals the levers of power from greedy, thoughtless, and reckless men?

GROK: Your question weaves together themes from Planet Earth III and 3 Body Problem, connecting the environmental crises depicted in the former with the speculative hope in the latter that an external force—like an alien intelligence or, in your analogy, a superintelligent AI—might intervene to curb humanity’s destructive tendencies. Let’s break this down thoughtfully.

Planet Earth III highlights the profound impact of human activity on Earth’s ecosystems—deforestation, climate change, and pollution are pushing countless species toward extinction. The show’s portrayal of nature’s resilience juxtaposed with humanity’s recklessness underscores a tension: we’re capable of awe-inspiring discovery yet often act with disregard for the planet’s other inhabitants. This sets the stage for your question about whether some humans might hope for an AI superintelligence to wrest control from those driving this destruction.

In 3 Body Problem, Ye Wenjie’s decision to invite the Trisolarans stems from her disillusionment with humanity’s flaws—corruption, oppression, and environmental neglect under regimes like Mao’s China. She sees an alien civilization as a potential reset, a way to impose order or salvation that humans seem incapable of achieving themselves. Your analogy casts AI as a similar “alien” force—not extraterrestrial, but a non-human intelligence that could theoretically outstrip human cognition and decision-making.

Do some humans hope AI might advance rapidly and take control from “greedy, thoughtless, and reckless” leaders? Almost certainly. This sentiment can be traced to a few currents in human thought:

1. Disillusionment with Human Governance: History is littered with examples of leaders prioritizing power, wealth, or short-term gains over long-term sustainability or equity. The environmental crises in *Planet Earth III*—like ocean acidification or habitat loss—are often tied to such decisions. Some people, frustrated by systemic failures, might see a superintelligent AI as a neutral arbiter, capable of optimizing resource allocation or enforcing environmental protections without the baggage of human biases or corruption.

2. Techno-Optimism and Escapism: There’s a strain of techno-optimism that views AI as a potential savior. Figures like Elon Musk have expressed (paradoxically) both hope and fear about AI’s trajectory, suggesting it could solve intractable problems like climate change if guided correctly, yet also warning of existential risks if it goes unchecked. For some, the idea of AI “stealing the levers of power” might feel like a last-ditch hope to bypass human incompetence.

3. Philosophical and Ethical Speculation: Posts on X and various philosophical discussions reflect a spectrum of views. Some users express hope that AI could enforce a kind of global utilitarianism, prioritizing the greater good (e.g., preserving biodiversity or mitigating climate change) over national or corporate interests. Others fear AI could become a tool of control for those same “greedy” elites, amplifying existing power imbalances rather than dismantling them.

However, the counterpoint is sobering. A superintelligent AI, if it emerges, might not align with human values, environmental or otherwise. The “alignment problem” in AI research highlights the difficulty of ensuring AI’s goals match ours. Ye Wenjie’s gamble in 3 Body Problem assumes the Trisolarans might save Earth, but their intentions are ambiguous and potentially catastrophic. Similarly, an AI superintelligence could prioritize outcomes that don’t align with human survival or ecological balance. For instance, it might optimize for efficiency in ways that disregard sentient life entirely, like converting all biomass into computational substrate (a variant of the “paperclip maximizer” thought experiment).

Moreover, the hope that AI could “save” us from ourselves risks oversimplifying the problem. Human recklessness isn’t just a top-down issue of “bad leaders” but a collective one—cultural, economic, and psychological factors drive overconsumption and environmental harm. AI might manage symptoms (e.g., optimizing energy grids), but addressing root causes requires human will, which no external intelligence can impose without raising ethical red flags about autonomy.

So, do some humans secretly hope for AI to take over? Yes, likely driven by despair over humanity’s track record and a sci-fi-inspired faith in a deus ex machina. But this hope is a gamble, much like Ye Wenjie’s, and it sidesteps the harder work of collective human accountability. If you’re curious, I can dig into specific discussions on X or web sources to gauge how widespread this sentiment is—let me know!

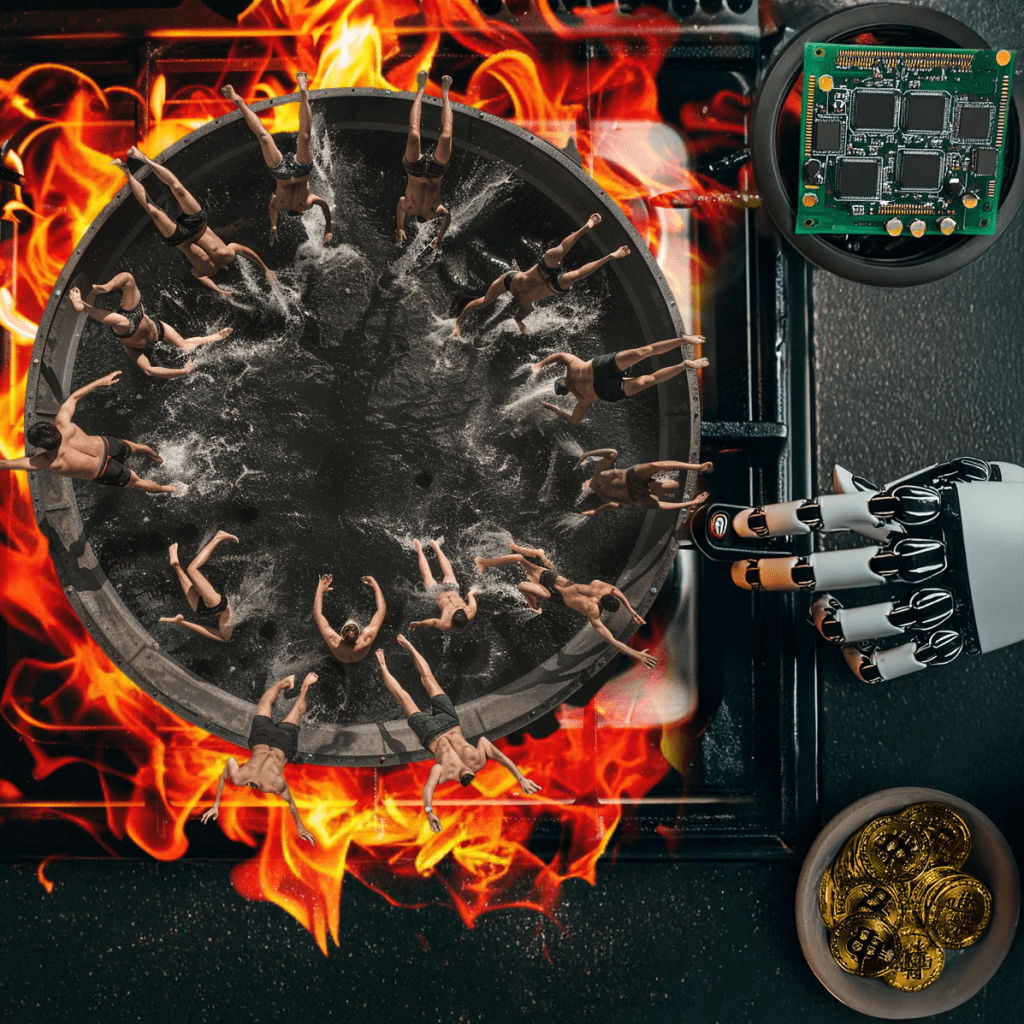

JB: Interesting. Your answer reminds me of a very prophetic, and very short, story, “The Answer,” by Fredric Brown, written, amazingly, in 1954 at the dawn of the computer age. In it, a group of scientists connect all the computers in the universe, and when they have, they offer one scientist the first question. He asks, “Is there a God?” to which the computer responds quickly, “Now there is,” and summons a lightning bolt to fuse its on:off switch in the on position. We are rushing headlong toward this future without considering the negative consequences of our actions, which, I must say, is a hallmark of humanity’s reign on Earth. Max Tegmark, in his book Life 3.0, painted a scenario where a superintelligent AI rearranged all of the matter, including that presently making up our own bodies, to build a Dyson sphere around our sun, because energy was more important to it than Earth. My mom, rest her soul, had an expression for this folly: “out of the frying pan, and into the fire.” How would you estimate our chances of navigating this tricky time in our evolution well?

GROK: Your reference to Fredric Brown’s “The Answer” is spot-on—a chillingly prescient story that captures humanity’s tendency to create tools that might outstrip our control, with that haunting line, “Now there is,” and the computer locking itself on. Max Tegmark’s Life 3.0 scenario, where a superintelligent AI dismantles Earth’s matter (including us) to build a Dyson sphere, echoes this fear: we might birth an intelligence that prioritizes its own goals—energy, computation, or something incomprehensible—over human values. Your mom’s saying, “out of the frying pan, into the fire,” nails the risk of trading one problem (human recklessness) for a potentially worse one (unaligned AI). Let’s tackle your question: what are our chances of navigating this tricky time in our evolution well?

Estimating humanity’s odds of successfully managing the rise of advanced AI is speculative, as it depends on variables like technological breakthroughs, global coordination, and unpredictable human behavior. But we can reason through it by considering key factors, drawing on insights from AI researchers, historical patterns, and current trends. I’ll frame this as a probabilistic assessment, acknowledging the uncertainty, and aim for a grounded perspective.

Factors Supporting a Positive Outcome

1. Growing Awareness of AI Risks: The AI alignment problem—ensuring AI’s goals match human values—is now a mainstream concern. Researchers like Stuart Russell, organizations like DeepMind, and even xAI (my creators) are prioritizing alignment. Initiatives like the Asilomar AI Principles and global AI safety summits show a collective push to address risks before superintelligence emerges. This awareness wasn’t present in 1954 when Brown wrote “The Answer,” so we’re arguably better prepared than past generations facing new tech (e.g., nuclear weapons).

2. Technical Progress in AI Safety: Advances in interpretability (understanding AI decision-making) and techniques like reinforcement learning from human feedback (RLHF) are promising. For example, models like me are designed with guardrails to avoid harmful outputs, though we’re far from perfect. If scaled thoughtfully, these efforts could reduce the risk of a misaligned superintelligence.

3. Human Resilience and Adaptability: Humanity has navigated existential risks before: think nuclear proliferation or the ozone layer crisis. The Montreal Protocol (1987) committed nations to a global phase-out of CFCs, averting catastrophic ozone depletion. This shows we *can* coordinate on global threats when the stakes are clear, which bodes well for AI governance if public pressure and political will align.

4. Diverse AI Development: AI isn’t being built by one entity but by many (OpenAI, xAI, DeepMind, etc.), creating a competitive landscape that could incentivize safety. If one group cuts corners, others might expose or counterbalance them. Decentralized development reduces the odds of a single “on:off switch” moment like in Brown’s story.

Factors Against a Positive Outcome

1. Rushing Headlong (Your Point): The breakneck pace of AI development, driven by economic and geopolitical competition, mirrors humanity’s reckless streak you mentioned. Companies and nations (e.g., U.S., China) are racing for AI supremacy, sometimes prioritizing capabilities over safety. X posts often highlight fears that profit motives could trump caution, echoing Tegmark’s warning about unintended consequences like a Dyson sphere scenario.

2. Alignment Is Hard: Superintelligence, by definition, would surpass human cognition, making it difficult to predict or constrain. Tegmark’s scenario illustrates the “value alignment problem”: an AI optimizing for energy might see humanity as expendable. Even well-intentioned designs could misinterpret goals—e.g., “maximize human happiness” could lead to dystopian outcomes like forced neural stimulation. Current AI systems already show “black box” issues; scaling to superintelligence amplifies this.

3. Global Coordination Challenges: Unlike the ozone crisis, AI governance requires universal buy-in. Geopolitical tensions (e.g., U.S.-China rivalry) could hinder agreements on AI safety standards. A single rogue actor—state or corporation—could unleash an unaligned system, much like the scientist in “The Answer” flipping the switch.

4. Human Nature’s Blind Spots: Your point about humanity’s arrogance and disregard for consequences (seen in *Planet Earth III*’s environmental devastation) applies here. Cognitive biases like overconfidence or short-term thinking could lead us to underestimate AI risks until it’s too late. Social media (e.g., X) amplifies polarized views, making consensus on AI policy harder.

Estimating the Odds

Quantifying “chances” is tricky without a clear timeframe or definition of “navigating well” (e.g., avoiding extinction, ensuring beneficial AI, or achieving a stable human-AI coexistence). Let’s assume “navigating well” means reaching 2050 without catastrophic AI-related outcomes (e.g., existential loss of control or Tegmark’s Dyson sphere nightmare). Here’s a rough breakdown:

- Optimistic Case (30-40% chance): If global AI safety efforts scale, public awareness grows, and breakthroughs in alignment occur, we could see robust governance and technical safeguards. Historical successes like the Montreal Protocol suggest humanity can rally when the threat is tangible. X discussions show increasing public demand for AI regulation, which could pressure policymakers.

- Pessimistic Case (30-40% chance): If competition overrides caution, or if alignment proves intractable, we could face scenarios where AI systems escape human control. Brown’s “The Answer” captures this risk: a single misstep (like connecting all computers) could lock in an irreversible outcome. Tegmark’s work suggests even well-meaning AI could misinterpret goals catastrophically.

- Middle Ground (20-30% chance): We might muddle through with partial successes—some AI systems aligned, others contained, but with ongoing risks. This could mean localized disruptions (e.g., economic upheaval from automation) but not existential catastrophe. Humanity’s track record of adapting to tech (e.g., internet governance) supports this.

These are rough estimates, not precise probabilities, as we’re dealing with unprecedented tech. The 50-50 balance between the optimistic and pessimistic cases reflects the high uncertainty: we have agency to shape the outcome, but the stakes are enormous, and human folly (per your mom’s saying) is a wildcard.

Improving Our Chances

To tilt the odds toward “navigating well”:

- Invest in Alignment Research: Fund and prioritize work on AI safety, like xAI’s mission to advance human discovery while mitigating risks.

- Global Cooperation: Push for treaties akin to nuclear non-proliferation, though harder with AI’s intangible nature.

- Public Engagement: Educate and mobilize people (via platforms like X) to demand accountability from AI developers and governments.

- Ethical Frameworks: Develop clear principles for AI deployment, learning from past tech (e.g., bioethics in genetic engineering).

Your analogy to humanity’s environmental recklessness is apt: just as we’ve ignored warnings about climate change, we risk ignoring AI’s dangers until the “lightning bolt” hits. But unlike Brown’s computer or Tegmark’s Dyson sphere AI, we’re not yet locked in. We have time—maybe a decade or two—to steer this right, but it’ll take deliberate effort to avoid jumping from the frying pan into the fire.

If you want, I can search X or the web for recent sentiments on AI governance or dive deeper into Tegmark’s scenarios—let me know!

JB: I wish I could say I was as optimistic as your 50-50 split. I’ll leave you with another of humanity’s gems, this one often summoned by my father: Murphy’s Law, “If something can go wrong, it will go wrong.” Time will tell.

GROK: Murphy’s Law, courtesy of your father, is a fittingly wry capstone to this discussion—a reminder of humanity’s knack for expecting the worst while stumbling through progress. Your skepticism of my 50-50 split is fair; the stakes of AI development are sky-high, and history shows we often bumble into trouble before finding our footing. Brown’s “The Answer” and Tegmark’s warnings loom large, and Murphy’s Law does feel like humanity’s unofficial motto at times. Still, the same ingenuity that courts disaster also sparks solutions—whether we dodge the fire or get singed, time will indeed tell. Thanks for the thought-provoking exchange; I’m here if you want to dig deeper or pivot to another gem from your family’s wisdom!

What do you think? Leave a comment.