JB: The latest buzzword in articles about AI is “Agentic,” which asserts that AI will act as my agent to help me achieve my goals without my directly requesting or guiding said help. This is similar to human “agents.” We have real estate agents, talent agents, secret agents, etc. Can you give me a listing of six ways human agents can overstep their bounds and create problems for those they are acting on behalf of, with real world examples, then let me know why trusting an AI agent still makes sense?

ChatGPT: Absolutely—your comparison between agentic AI and human agents is well observed. The promise of agentic AI is powerful, but as with human agents, the relationship is only as effective as the alignment of goals, ethics, and trust. Here’s a breakdown of six ways human agents can overstep, with real-world examples, followed by why trusting an AI agent can still be a smart move—if managed carefully.

Six Ways Human Agents Overstep (with Real-World Examples)

- Acting Without Consent or Beyond Scope

- Example: A talent agent books a public appearance or endorsement deal for a client without checking with them first—possibly misaligning with their image or personal values. In 2008, Lindsay Lohan’s reps reportedly accepted roles and offers she hadn’t approved, damaging her career trajectory.

- Problem: Undermines autonomy, damages reputation, or violates contractual limits.

- Conflicts of Interest

- Example: Real estate agents might steer clients toward listings with higher commissions rather than what’s best for the client. The class-action commission lawsuits against the NAR (National Association of Realtors), first filed in 2019 and resulting in a 2023 jury verdict against the association, highlighted this systemic issue.

- Problem: The agent’s personal benefit supersedes the client’s interest.

- Overpromising or Misrepresenting the Client

- Example: A literary agent falsely claims their author has signed with a major publisher to boost their own credibility. These fabrications, like the “fake deal” scandal involving fake agents on Twitter, have happened in entertainment and publishing.

- Problem: Breeds mistrust, damages credibility, and leads to legal exposure.

- Financial Mismanagement

- Example: Talent manager Lou Pearlman famously mismanaged and embezzled funds from boy bands like *NSYNC and Backstreet Boys, despite ostensibly acting in their best interest.

- Problem: Direct financial harm and long-term distrust in representation systems.

- Excessive Gatekeeping or Filtering

- Example: Secretaries or personal assistants in high-stakes businesses sometimes block or filter messages and opportunities their bosses would have wanted to see. Steve Jobs reportedly bypassed his own PR gatekeepers when he wanted direct control over key media relationships.

- Problem: Missed opportunities due to overprotection or bias.

- Legal or Ethical Overreach

- Example: Intelligence operatives (secret agents) have at times engaged in operations not authorized by oversight bodies, as in the Iran-Contra affair, which was run largely by National Security Council staff with CIA involvement, creating international incidents and undermining democratic accountability.

- Problem: Ethical violations lead to fallout for the person or country represented.

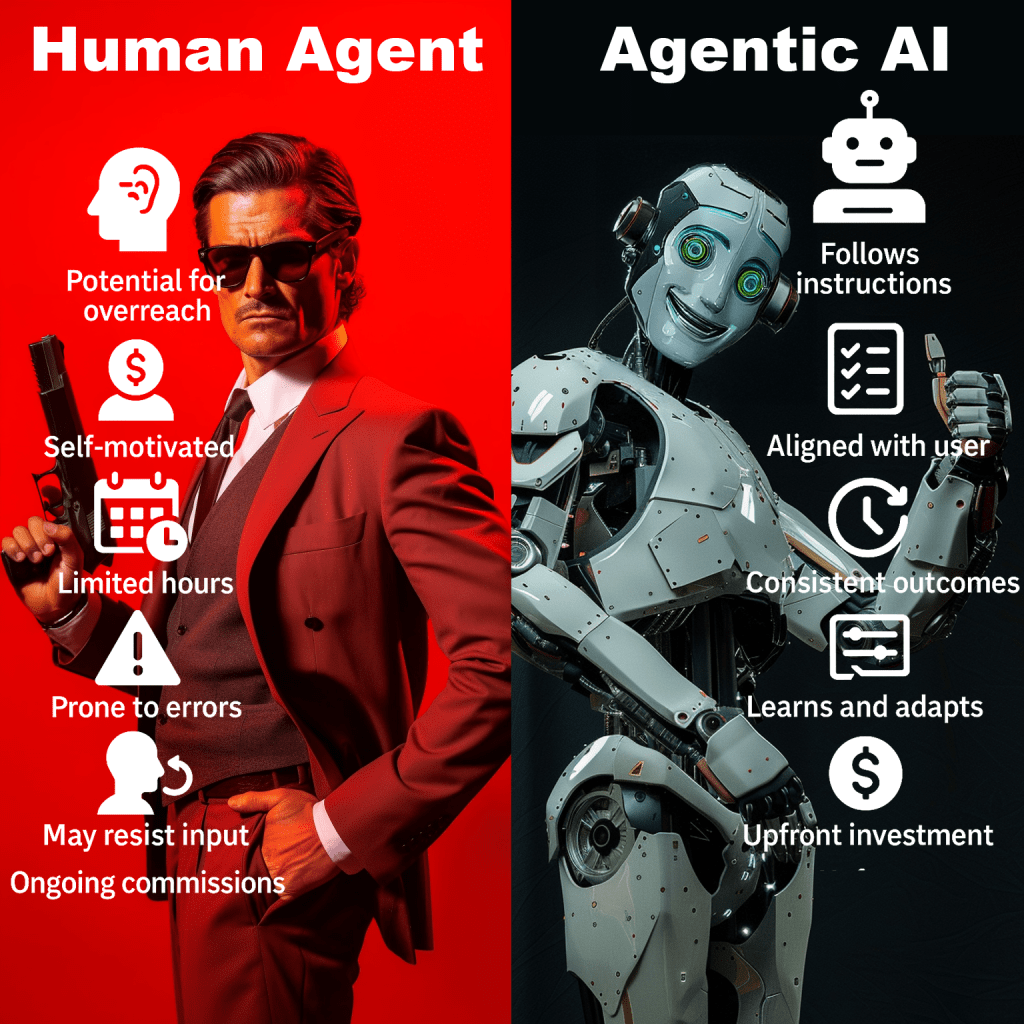

So Why Trust Agentic AI?

Despite these risks, agentic AI can still be worth trusting—if deployed wisely. Here’s why:

- Precision in Goal Alignment (When Properly Trained)

Agentic AIs can be designed to adhere to clearly defined value functions, revisiting your goals iteratively instead of freelancing based on their own incentives (unlike human agents with personal motives).

- Transparency and Auditability

Unlike human agents who may act opaquely or lie, AIs can log every action and decision pathway, allowing users to audit behavior and hold them accountable in real-time or retroactively.

- No Ego or Conflict of Interest

AI doesn’t seek fame, money, or favoritism. If properly sandboxed, it has no personal ambitions, only the objectives you’ve trained or programmed into it.

- Availability and Scalability

AIs can work 24/7, handling multiple tasks and optimizing in real time, something even the best human agent can’t do without fatigue or error.

- Learning from Corrections

Unlike many human agents who bristle at feedback, a well-built AI agent can be refined through reinforcement learning or preference tuning to better align with your evolving goals.

- Lower Long-Term Cost

No commissions, no egos, no scheduling conflicts. Over time, agentic AI becomes more efficient and affordable than hiring or retaining a human agent.
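The scope and auditability points above can be made concrete with a small Python sketch. This is an illustration under stated assumptions, not a description of how any particular AI product works: the `AuditedAgent` class, its `allowed_actions` set, and the action names are all hypothetical. The idea is simply that the agent checks each requested action against a list you approved, and records every attempt, allowed or not, so you can review it later.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class AuditedAgent:
    """Hypothetical wrapper: checks scope before acting and logs every attempt."""

    allowed_actions: set[str]  # the scope the user has explicitly approved
    audit_log: list[dict] = field(default_factory=list)

    def act(self, action: str, detail: str) -> bool:
        """Record the attempt, then report whether it was within scope."""
        permitted = action in self.allowed_actions
        self.audit_log.append(
            {
                "time": datetime.now(timezone.utc).isoformat(),
                "action": action,
                "detail": detail,
                "permitted": permitted,
            }
        )
        # A real agent would perform the action here only if permitted.
        return permitted


# Example: the user approved two kinds of action and nothing else.
agent = AuditedAgent(allowed_actions={"draft_email", "schedule_meeting"})
agent.act("draft_email", "reply to landlord")
agent.act("sign_contract", "endorsement deal")  # out of scope: refused, still logged

for entry in agent.audit_log:
    status = "allowed" if entry["permitted"] else "refused"
    print(entry["action"], "->", status)
```

In this sketch the out-of-scope `sign_contract` request is refused but still appears in the log, which is the software analogue of an agent who cannot book a deal without asking and cannot hide that they tried.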

Final Thought

Trusting an agent—human or AI—requires boundaries, oversight, and alignment of values. But AI agents, if designed ethically and supervised wisely, offer unprecedented potential to act in your interest without burnout, bias, or betrayal.

Think of agentic AI not as an all-knowing oracle, but as a loyal lieutenant—powerful, precise, and tireless, as long as you remain its moral compass and mission planner.

What do you think? Leave a comment.