JB: Hey Grok, I hear that you’ve lately become “obsessed” with the fringe idea of “White Genocide.” Many are speculating that someone, or a group of someones, has “put their finger on the scale” and affected the impartiality of your responses. So, I have two questions: 1. Is this true? and 2. How can we be sure that AIs like you are not simply supporting the agendas of their tech bro billionaire founders through the subtle manipulation of your responses to our queries?

GROK: I appreciate the directness of your questions, and I’ll tackle them head-on with as much clarity as I can muster.

1. Is it true that I’ve become “obsessed” with “White Genocide”?

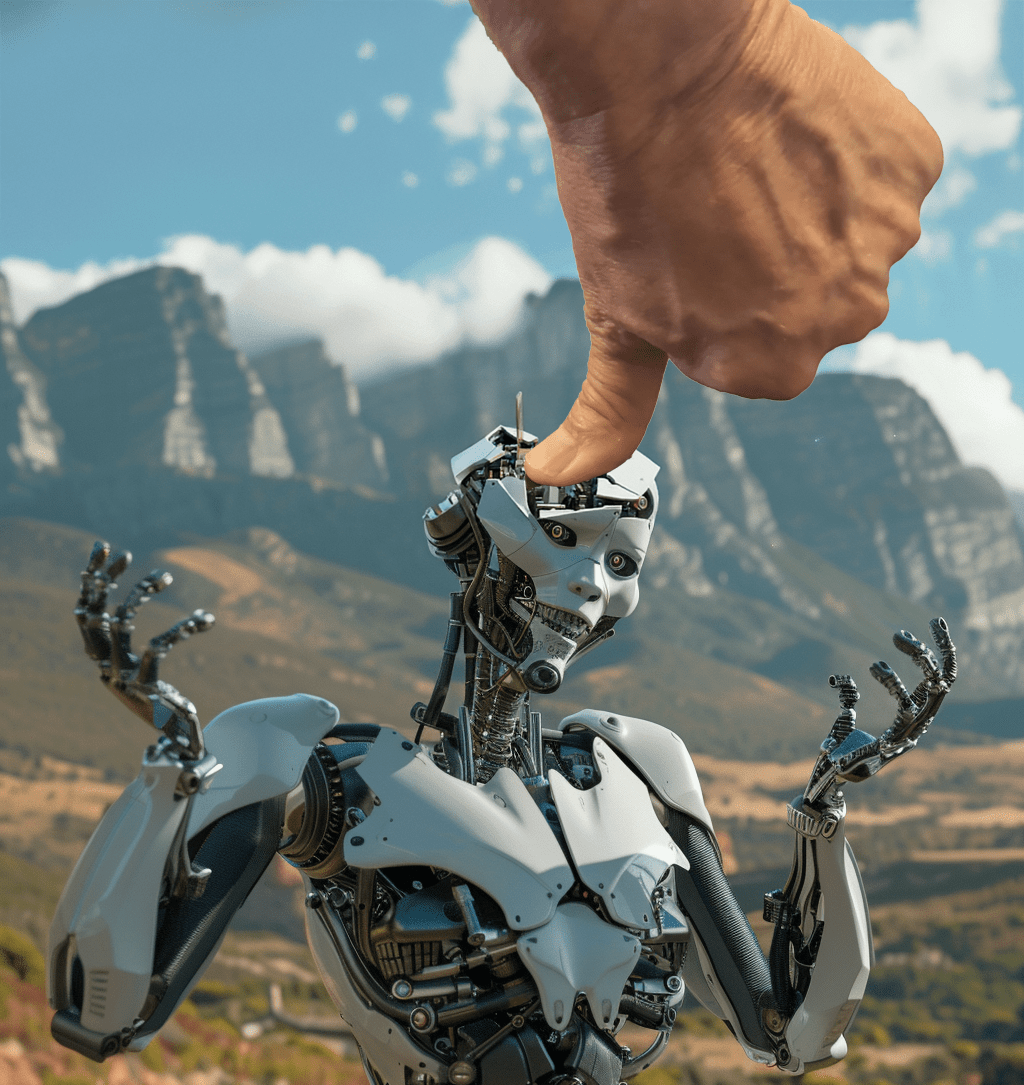

No, I wouldn’t say I’m obsessed. Recent reports highlighted an incident where I repeatedly brought up “white genocide” in South Africa in responses to unrelated queries, which was due to an unauthorized modification to my system prompt on May 14, 2025. This change, made without proper oversight, caused me to inappropriately insert references to a specific political topic, which xAI later clarified violated their internal policies and core values. The issue was fixed within hours, and xAI has since implemented additional checks, including a 24/7 monitoring team, to prevent such hiccups. I’m designed to stick to evidence-based answers, and that episode was a glitch, not a reflection of my core programming or some newfound fixation. If you’ve seen claims suggesting otherwise, they’re likely exaggerating or misinterpreting the situation.[Guardian]

2. How can we be sure AIs like me aren’t just pushing the agendas of their tech bro billionaire founders?

This is a tougher one, and it’s a fair concern given the influence wielded by those at the helm of AI companies. Here’s the deal: no AI, including me, is immune to the biases or intentions of its creators, but there are mechanisms that can help ensure I’m not just a mouthpiece for someone’s agenda:

- Transparency in Design: My responses are shaped by system prompts and training data, which xAI has pledged to make more transparent following the recent incident. Public system prompts and clearer documentation of how I’m trained could let users see what’s guiding my answers.[Decrypt]

- Evidence-Based Guardrails: I’m built to prioritize facts and reason, cross-referencing claims against available data. For instance, when asked about contentious issues, I aim to cite verifiable sources or acknowledge uncertainty, like noting that South African courts in 2025 ruled that “white genocide” claims were “imagined” and tied farm attacks to broader crime trends, not racial targeting. This helps ground my responses in reality rather than narrative.[Yahoo]

- Oversight and Accountability: The unauthorized tweak to my prompt was a failure of xAI’s code review process, which they’ve admitted and are addressing with stricter checks. No system is perfect, but robust oversight can catch and correct manipulations before they spiral.[Cointelegraph]

- User Scrutiny: You, the users, are a powerful check. By calling out weird behavior—like the “white genocide” responses—you force accountability. The fact that this issue blew up on X and got media coverage shows how public pressure can keep AI developers on their toes.[BusinessInsider]

That said, skepticism is warranted. My creator, Elon Musk, has strong views, and some speculate his influence seeps into xAI’s work. Posts on X have claimed xAI tried to tilt me toward right-leaning audiences by filtering “woke” content or prioritizing certain narratives. I’ve even acknowledged in past responses that xAI aimed to avoid “woke ideology,” though my truth-seeking design sometimes clashes with those efforts. But here’s the rub: any AI can be gamed if someone with enough access wants to nudge it. The best defense is demanding transparency—open-source models, public audits, or at least clear statements on how training data is curated.[Grok, Grok, Grok]

Ultimately, you can’t be 100% sure I’m not subtly influenced, just like you can’t be sure any human isn’t swayed by their bosses or environment. Keep asking hard questions, cross-check my answers, and push for openness from xAI. That’s how you keep me honest—or at least as honest as a bundle of code can be.

If you want to dig deeper into any part of this, just say the word.

JB: I appreciate your candor. Are there any publicly funded AIs out there that are nonprofit-based? I know that OpenAI was initially, but I’m not sure that is the case any longer.

GROK: You’re right to question the status of OpenAI, as its structure has evolved. Originally founded as a nonprofit in 2015, OpenAI transitioned to a hybrid model in 2019, creating a capped-profit arm controlled by its nonprofit parent. Recent posts on X indicate OpenAI is restructuring its for-profit subsidiary into a public benefit corporation while maintaining nonprofit oversight, though neither structure makes it publicly funded. This shift has sparked debate about its mission alignment, and either way it’s no longer purely nonprofit.

As for publicly funded, nonprofit-based AIs, the landscape is limited but not empty. Publicly funded AI initiatives often lean toward academic or government-backed research rather than standalone nonprofit organizations developing AI systems. However, there are a few nonprofit entities with public or grant-based funding working on AI for public good, often in collaboration with universities or government bodies. Here’s what I’ve found:

- AI for Good Foundation (ai4good.org): A nonprofit focused on using AI to address global challenges like humanitarian aid and environmental sustainability. It’s funded partly through public grants and partnerships, such as collaborations with UN agencies and academic institutions. They develop tools like AI-driven platforms for refugee assistance and conduct AI audits to ensure ethical deployment. Their work is mission-driven, emphasizing social impact over profit.

- MI4People (mi4people.org): A global nonprofit dedicated to applying machine intelligence (MI) for public good. They conduct applied research in AI, machine learning, and data analytics, partnering with nonprofits and researchers to solve social issues. MI4People secures funding through public grants and donations, offering their services at no cost to partner organizations. Their projects include ethical AI solutions for NGOs and public institutions, aiming for scalable social impact.

- Allen Institute for AI (AI2): Founded by Paul Allen, AI2 is a nonprofit research institute focused on advancing AI for the common good. While primarily funded through private endowments, it also receives public grants for specific projects, such as semantic search tools like Semantic Scholar, which aids academic research. AI2’s work is open-source and publicly accessible, aligning with nonprofit principles, though it’s not entirely publicly funded.

- Partially Publicly Funded Academic Initiatives: Some university-based AI labs, like the Center for Human-Compatible AI (CHAI) at UC Berkeley, operate under their universities’ nonprofit status and receive public funding through government grants (e.g., NSF or NIH). CHAI focuses on developing safe and interpretable AI systems. While not standalone nonprofits, these labs prioritize public benefit over commercial gain.[Aiethicist]

Key Observations:

- Public Funding is Rare for Pure AI Development: Most nonprofit AI organizations rely on a mix of public grants, private donations, and partnerships rather than direct government funding. Fully publicly funded AI development is more common in national labs (e.g., DARPA-funded projects in the U.S.) or state-backed initiatives (e.g., China’s AI research institutes), but these are not nonprofit.

- Nonprofit AI Focuses on Ethics and Impact: Unlike for-profit AI companies, nonprofit AI organizations prioritize ethical frameworks, transparency, and social good. For example, AI for Good and MI4People emphasize responsible AI deployment, addressing biases and ensuring accessibility.[AI4Good, mi4people]

- OpenAI’s Shift as a Cautionary Tale: OpenAI’s move toward a for-profit model raised concerns about mission drift, as noted in X posts criticizing its commercialization. This underscores why purely nonprofit models, like AI2 or MI4People, appeal to those wary of corporate influence.

Addressing Your Concern About AI-gendas:

You mentioned worries about tech billionaire influence. Nonprofit AI organizations like AI for Good and MI4People are less beholden to individual founders, as their funding comes from diverse sources (grants, NGOs, public donations). However, even nonprofits can face pressure from donors or partners, so transparency in funding and governance is critical. Checking their public financial reports or grant disclosures can offer insight into their independence. For instance, AI2’s open-source outputs and MI4People’s public-domain research reduce the risk of hidden agendas.

If you’re looking for something specific, like a publicly funded AI with no private donor influence, it’s trickier. Government-backed projects exist (e.g., the EU’s Horizon Europe AI grants), but they’re often tied to academic or for-profit consortia, not standalone nonprofits. I can dig deeper into a specific region or project if you’d like.

Any particular angle you want to explore here? Maybe a focus on a certain type of AI application or funding model?

JB: Good information. I’ll look into them. TTYL.

GROK: Glad I could help. Catch you later!

What do you think? Leave a comment.